High-volume reverse geocoding with Nominatim

A recent project required us to map a large number of GPS coordinates to the names of the municipalities they lie in, a task known as reverse geocoding. The initial dataset contained over 3 million coordinate pairs from a few specific regions in Switzerland and the adjacent border areas, and about a thousand new pairs are added daily.

Evaluating geocoding solutions: cost vs. control

When dealing with this volume of data, the choice of a geocoding service has significant implications.

Commercial services offer convenience and are simple to integrate. However, the costs can be substantial. Based on the public pricing calculator of a major mapping provider, our 3 million lookups would cost approximately $6,500, with ongoing monthly costs of around $100 for the daily additions. Furthermore, licensing terms often place restrictions on the permanent storage and reuse of the results.

Free services, such as the one offered by the Swiss government, are valuable but may not be a complete fit if your data, like ours, includes locations in neighboring countries.

This led us to explore Nominatim, an open-source geocoding engine that uses OpenStreetMap data. Nominatim offers a free public API, but its usage policy is designed for occasional, non-bulk use and allows at most one request per second. At that rate, our 3 million coordinates alone would take about 35 days of uninterrupted querying, and a bulk job like this would not align with the fair use policy.
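For the occasional request that does go to the public API (as in the daily lookups described below), a client has to stay at or below one request per second and, per the usage policy, identify itself with its own User-Agent instead of a library default. The following is a minimal sketch using only the Python standard library; the `ThrottledGeocoder` class and its parameters are our own hypothetical names, not part of any Nominatim client library.

```python
import json
import time
import urllib.parse
import urllib.request


class ThrottledGeocoder:
    """Hypothetical helper that keeps a minimum interval between requests."""

    def __init__(self, base_url: str, user_agent: str, min_interval: float = 1.0):
        self.base_url = base_url
        self.user_agent = user_agent
        self.min_interval = min_interval
        self._last_request = None  # time.monotonic() of the previous request

    def _wait_for_slot(self) -> None:
        # Sleep just long enough to keep at least `min_interval` seconds
        # between two consecutive requests.
        if self._last_request is not None:
            elapsed = time.monotonic() - self._last_request
            if elapsed < self.min_interval:
                time.sleep(self.min_interval - elapsed)
        self._last_request = time.monotonic()

    def reverse(self, lat: float, lon: float) -> dict:
        """Municipality-level reverse lookup (zoom=10) as a geocodejson dict."""
        self._wait_for_slot()
        query = urllib.parse.urlencode({
            "format": "geocodejson",
            "zoom": 10,
            "accept-language": "de-CH",
            "lat": lat,
            "lon": lon,
        })
        request = urllib.request.Request(
            f"{self.base_url}?{query}",
            headers={"User-Agent": self.user_agent},  # identify your application
        )
        with urllib.request.urlopen(request, timeout=10) as response:
            return json.load(response)
```

For example, `ThrottledGeocoder("https://nominatim.openstreetmap.org/reverse", user_agent="my-project/1.0 (you@example.com)").reverse(47.4798817, 8.3052468)` sends the same query as the curl test further down; the User-Agent string is a placeholder to replace with your own application name and contact.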

The most viable path forward was to follow Nominatim’s recommendation for power users: host our own instance.

A self-hosted Nominatim instance with a smart caching strategy

By hosting our own Nominatim server, we can address the core requirements of our scenario: processing a large volume of requests without rate limits and having unrestricted rights to store and reuse the resulting data.

Because our data is geographically concentrated around known regions in Switzerland, we could implement a “smart cache” to dramatically reduce the number of lookups required.

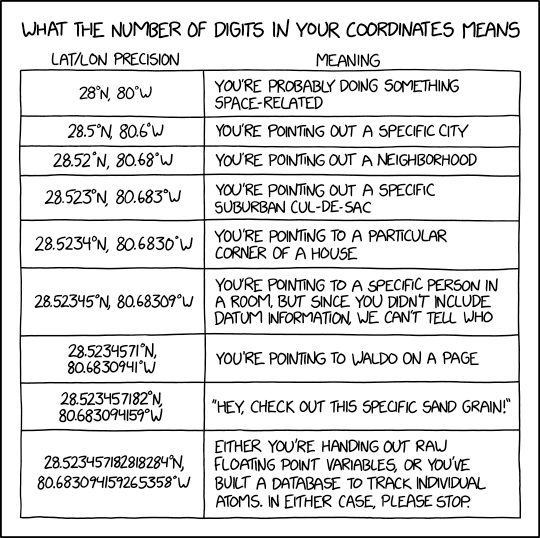

- Pre-computation and Caching: Instead of processing every coordinate individually, we created a comprehensive grid of points covering the main areas of interest. These points were rounded to three decimal places, which introduces a mean positional error of approximately 36 meters, a fair trade-off between accuracy at the municipality level and cache size. We then combined this pre-calculated grid with all unique rounded coordinates from our dataset that fell outside these primary zones. This reduced the initial 3 million coordinates to approximately 200,000 unique points to be geocoded. We then ran this set through our local Nominatim server and stored the results in a cache.

- Optimized Daily Lookups: For the thousand new coordinates added each day, we apply the same rounding logic. The vast majority of these points, once rounded, will already be in our pre-populated cache. For the few that are truly new, we query the public Nominatim server and add the result to the cache, continuously improving its coverage.
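The two steps above boil down to a lookup table keyed by rounded coordinates. The following is a minimal in-memory sketch under our own assumptions: the names `build_grid`, `points_to_geocode` and `RoundedCache`, the bounding-box parameters and the `lookup` callable (for example a function that queries the local or public Nominatim server and returns a municipality name) are hypothetical, and a real setup would persist the cache in a database or file.

```python
from typing import Callable, Dict, Iterable, Optional, Set, Tuple

Point = Tuple[float, float]  # (lat, lon)


def round_point(lat: float, lon: float, decimals: int = 3) -> Point:
    """Snap a coordinate to the cache grid (0.001° steps for 3 decimals)."""
    return (round(lat, decimals), round(lon, decimals))


def build_grid(lat_min: float, lat_max: float,
               lon_min: float, lon_max: float, step: float = 0.001) -> Set[Point]:
    """All rounded grid points inside a bounding box (bounds inclusive)."""
    n_lat = round((lat_max - lat_min) / step)
    n_lon = round((lon_max - lon_min) / step)
    return {
        round_point(lat_min + i * step, lon_min + j * step)
        for i in range(n_lat + 1)
        for j in range(n_lon + 1)
    }


def points_to_geocode(grid: Set[Point], coords: Iterable[Point]) -> Set[Point]:
    """Union of the pre-computed grid and all rounded points of the dataset."""
    return grid | {round_point(lat, lon) for lat, lon in coords}


class RoundedCache:
    """Maps rounded coordinates to a municipality name."""

    def __init__(self, lookup: Callable[[float, float], Optional[str]]):
        self.lookup = lookup  # e.g. a function that queries Nominatim
        self.entries: Dict[Point, Optional[str]] = {}
        self.lookups = 0  # number of calls made to `lookup`

    def fill(self, points: Iterable[Point]) -> None:
        """Initial bulk run: geocode every point not cached yet."""
        for point in points:
            if point not in self.entries:
                self.entries[point] = self.lookup(*point)
                self.lookups += 1

    def get(self, lat: float, lon: float) -> Optional[str]:
        """Daily lookup: answer from the cache, query only truly new points."""
        key = round_point(lat, lon)
        if key not in self.entries:
            self.entries[key] = self.lookup(*key)
            self.lookups += 1
        return self.entries[key]
```

Because every new coordinate is rounded before the cache check, most daily lookups never reach a geocoding server at all; only points that land in a previously unseen 0.001° cell trigger a request.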

This pre-computation and rounding strategy is the key to handling a large volume of data efficiently.
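The figure of roughly 36 meters for the mean rounding error can be reproduced with a short calculation. Rounding to three decimals moves a point by at most 0.0005° in each direction. Assuming the original points are spread uniformly within each grid cell and treating the Earth as a sphere with a mean radius of 6,371 km (both simplifications of ours), the mean displacement is the average distance between a cell's center and a random point inside it. This sketch estimates it with a Monte Carlo simulation; the latitude of 47.5° for the Baden area is our own choice.

```python
import math
import random

EARTH_RADIUS_M = 6_371_000  # mean radius, spherical approximation
METERS_PER_DEGREE = 2 * math.pi * EARTH_RADIUS_M / 360  # about 111,195 m


def mean_rounding_error(lat_deg: float, decimals: int = 3,
                        samples: int = 200_000, seed: int = 1) -> float:
    """Monte Carlo estimate of the mean distance (in meters) a point moves
    when both coordinates are rounded to `decimals` decimal places."""
    half_step = 0.5 * 10 ** -decimals  # maximum rounding offset in degrees
    dy_max = half_step * METERS_PER_DEGREE  # north-south
    dx_max = dy_max * math.cos(math.radians(lat_deg))  # east-west shrinks with latitude
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        dx = rng.uniform(-dx_max, dx_max)
        dy = rng.uniform(-dy_max, dy_max)
        total += math.hypot(dx, dy)
    return total / samples


print(f"mean rounding error at 47.5° N: {mean_rounding_error(47.5):.1f} m")
```

At 47.5° the estimate comes out at about 36 m. At the equator the same rounding would cost more, because the cells are wider there.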

Setting up a local Nominatim server

The official Nominatim documentation provides detailed installation instructions for various Linux distributions.

As my development machine runs Windows, I used the Windows Subsystem for Linux (WSL) to set up an appropriate environment:

```
wsl --install --distribution Ubuntu-24.04 --name Nominatim
```

A full Nominatim installation with worldwide data requires nearly 1 TB of storage. However, our tutorial's scope is limited, so we can use regional data extracts from Geofabrik to significantly reduce the storage and processing footprint. In the following, we'll focus on Baden, a Swiss town close to the border with the German state of Baden-Württemberg. We therefore need data for both Switzerland and Baden-Württemberg to ensure complete coverage, and can import both files in one run:

```
nominatim import --osm-file switzerland-latest.osm.pbf --osm-file baden-wuerttemberg-latest.osm.pbf
```

After the import, we can start the server, which listens on localhost:8088 by default:

```
nominatim serve
```
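The import command expects both extract files in the working directory. They can be fetched from Geofabrik's download server beforehand. A minimal sketch with the Python standard library follows; the URLs reflect Geofabrik's current directory layout (Switzerland under europe/, Baden-Württemberg under europe/germany/), which is worth double-checking on the site, and `download_extract` and `download_all` are our own hypothetical helpers.

```python
import shutil
import urllib.request
from pathlib import Path
from typing import List

GEOFABRIK = "https://download.geofabrik.de"

# Extracts used in this article; paths as listed on download.geofabrik.de
EXTRACTS = {
    "switzerland-latest.osm.pbf":
        f"{GEOFABRIK}/europe/switzerland-latest.osm.pbf",
    "baden-wuerttemberg-latest.osm.pbf":
        f"{GEOFABRIK}/europe/germany/baden-wuerttemberg-latest.osm.pbf",
}


def download_extract(url: str, target: Path) -> Path:
    """Stream one .osm.pbf file to disk, skipping files that already exist."""
    if target.exists():
        return target
    partial = target.with_name(target.name + ".part")
    with urllib.request.urlopen(url) as response, open(partial, "wb") as out:
        shutil.copyfileobj(response, out)  # copies in chunks, not all in memory
    partial.replace(target)  # only a complete download gets the final name
    return target


def download_all(directory: Path = Path(".")) -> List[Path]:
    """Download every extract listed in EXTRACTS into `directory`."""
    directory.mkdir(parents=True, exist_ok=True)
    return [download_extract(url, directory / name)
            for name, url in EXTRACTS.items()]
```

Calling `download_all()` in the directory where you run `nominatim import` leaves the two files under exactly the names the import command uses. Writing to a `.part` file first means an interrupted download is not mistaken for a finished one on the next run.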

Verifying the local instance

To ensure our local server produces the same results as the public API, we can run a test query for an office location in Baden. We’ll request the municipality (zoom=10), specify the language (accept-language=de-CH), and use the stable geocodejson format.

Query against the public API:

```
$ curl "https://nominatim.openstreetmap.org/reverse?format=geocodejson&lat=47.4798817&lon=8.3052468&zoom=10&accept-language=de-CH"
# Returns "name":"Baden"
```

Query against our local server:

```
$ curl "http://localhost:8088/reverse?format=geocodejson&lat=47.4798817&lon=8.3052468&zoom=10&accept-language=de-CH"
# Also returns "name":"Baden"
```

The results are identical: our local instance is functioning correctly and is ready to handle requests without external dependencies or rate limits.
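A single identical answer is a good smoke test. To compare the two servers on a handful of points, a small script can query both and report disagreements. This is a sketch using only the standard library; `reverse_name` and `compare_servers` are hypothetical helpers, and when one side is the public API, keep the list short and the pause at one second or more.

```python
import json
import time
import urllib.parse
import urllib.request
from typing import Callable, Iterable, List, Optional, Tuple

PUBLIC = "https://nominatim.openstreetmap.org/reverse"
LOCAL = "http://localhost:8088/reverse"


def reverse_name(base_url: str, lat: float, lon: float,
                 user_agent: str = "nominatim-comparison-sketch") -> Optional[str]:
    """Municipality name (zoom=10) from a Nominatim /reverse endpoint."""
    query = urllib.parse.urlencode({
        "format": "geocodejson", "zoom": 10, "accept-language": "de-CH",
        "lat": lat, "lon": lon,
    })
    request = urllib.request.Request(f"{base_url}?{query}",
                                     headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        data = json.load(response)
    features = data.get("features") or []  # missing when nothing was found
    return features[0]["properties"]["geocoding"].get("name") if features else None


def compare_servers(points: Iterable[Tuple[float, float]],
                    lookup_a: Callable[[float, float], Optional[str]],
                    lookup_b: Callable[[float, float], Optional[str]],
                    pause: float = 1.0) -> List[Tuple[float, float, Optional[str], Optional[str]]]:
    """Return (lat, lon, name_a, name_b) for every point where the names differ."""
    mismatches = []
    for lat, lon in points:
        a, b = lookup_a(lat, lon), lookup_b(lat, lon)
        if a != b:
            mismatches.append((lat, lon, a, b))
        time.sleep(pause)  # stay within the public API's 1 request per second
    return mismatches
```

A call such as `compare_servers([(47.4798817, 8.3052468)], lambda la, lo: reverse_name(PUBLIC, la, lo), lambda la, lo: reverse_name(LOCAL, la, lo))` returns an empty list when both servers agree. Passing the lookups in as functions keeps the comparison logic independent of how each server is queried.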

Grid scan around Baden

To showcase the performance, we’ll run a practical test: a reverse geocoding grid scan of the area around Baden. We can write a simple Python script to query 40,000 points in a 200 × 200 grid covering the region. For each point, we save the municipality and country name in a comma-separated values file.

```python
import csv

import numpy as np
import requests

# Public API: occasional use only, at most 1 request per second
# URL = "https://nominatim.openstreetmap.org/reverse"
URL = "http://localhost:8088/reverse"  # local Nominatim instance


def get_city_and_country(lat: float, lon: float):
    """Reverse-geocode one point at municipality level (zoom=10)."""
    params = {
        'format': 'geocodejson',
        'zoom': 10,
        'accept-language': 'de-CH',
        'lat': lat,
        'lon': lon,
    }
    response = requests.get(URL, params=params, timeout=10)
    response.raise_for_status()
    data = response.json()
    features = data.get('features', [])
    if not features:
        # Nominatim found nothing, e.g. outside the imported extracts
        return None, None
    geocoding = features[0]['properties']['geocoding']
    return geocoding.get('name'), geocoding.get('country')


with open("baden.csv", 'w', newline='', encoding='utf-8') as f:
    writer = csv.writer(f)  # quotes names that contain commas
    writer.writerow(['latitude', 'longitude', 'city', 'country'])
    # 200 x 200 grid in 0.001° steps; linspace guarantees exactly 200 values,
    # whereas np.arange with a float step can gain an extra value through rounding
    for lon in np.linspace(8.205, 8.404, 200):
        for lat in np.linspace(47.380, 47.579, 200):
            city, country = get_city_and_country(lat=lat, lon=lon)
            writer.writerow([f"{lat:.3f}", f"{lon:.3f}", city, country])
```

```
$ time python baden.py

real    3m29.410s
user    0m34.029s
sys     0m4.458s
```

The test completes in about 3.5 minutes on a standard laptop, which works out to roughly 191 requests per second (40,000 requests in 209 seconds). At this rate, geocoding the 200,000 unique coordinates for the cache takes about 17.5 minutes. This real-world performance confirms that the approach is viable.

Result

The script above produces a CSV file, baden.csv, with one row per grid point and the columns latitude, longitude, city and country.
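To get a first overview of the file without a map, the rows can be counted per municipality. A minimal sketch, assuming the header latitude,longitude,city,country written by the script above; `count_by_municipality` and `print_summary` are hypothetical helpers.

```python
import csv
from collections import Counter
from typing import Iterable


def count_by_municipality(lines: Iterable[str]) -> Counter:
    """Number of grid points per (city, country) pair in a baden.csv-style file."""
    reader = csv.DictReader(lines)  # accepts an open file or a list of lines
    return Counter((row["city"], row["country"]) for row in reader)


def print_summary(path: str = "baden.csv", top: int = 10) -> None:
    """Print the `top` municipalities with the most grid points."""
    with open(path, newline="", encoding="utf-8") as f:
        counts = count_by_municipality(f)
    for (city, country), n in counts.most_common(top):
        print(f"{city} ({country}): {n} points")
```

Since every grid point stands for a cell of roughly 111 m by 75 m, the counts are also a rough proxy for how much of the scanned window each municipality covers.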

Let’s visualize the coordinates on a map. We can see the individual data points around our office:

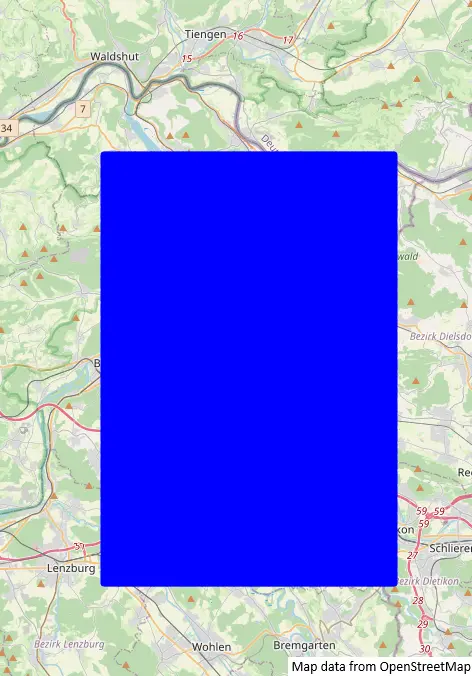

The grid density of 0.001° by 0.001° is sufficient to identify the municipality; street- or house-level identification would require a finer grid. If we zoom out, we can see the extent of the area we covered:

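The 200 × 200 dot grid appears oblong rather than square because Switzerland is far from the equator, and the distance between meridians shrinks with the cosine of the latitude. At Baden's latitude, a step of 0.001° in longitude corresponds to only about 75 m, whereas a step of 0.001° in latitude corresponds to about 111 m (approximately the same everywhere on Earth). The scanned window therefore measures roughly 22 km from north to south but only about 15 km from east to west.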
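The 75 m and 111 m figures follow from a spherical Earth approximation: one degree of latitude is a fixed fraction of a meridian's length, while one degree of longitude scales with the cosine of the latitude. A short sketch, assuming a mean Earth radius of 6,371 km and a latitude of 47.5° for the Baden area; `step_in_meters` is our own helper name.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius (spherical approximation)


def step_in_meters(step_deg: float, lat_deg: float) -> tuple:
    """(north-south, east-west) length in meters of a step of `step_deg` degrees."""
    meters_per_degree = 2 * math.pi * EARTH_RADIUS_M / 360  # about 111,195 m
    north_south = step_deg * meters_per_degree
    east_west = north_south * math.cos(math.radians(lat_deg))
    return north_south, east_west


ns, ew = step_in_meters(0.001, 47.5)
print(f"0.001° latitude: {ns:.0f} m, 0.001° longitude: {ew:.0f} m")
print(f"200 steps: {200 * ns / 1000:.1f} km x {200 * ew / 1000:.1f} km")
```

For 47.5° this gives about 111 m and 75 m per 0.001°, which is why the grid looks stretched in the north-south direction on the map.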

Conclusion

We demonstrated that for tasks involving high-volume reverse geocoding, commercial services are not the only option. Hosting a private Nominatim instance presents a practical and cost-effective alternative.

The initial setup requires some technical effort, but combining it with a smart caching strategy like coordinate rounding can dramatically reduce the computational workload. This approach offers full control over performance, no rate limits, and unrestricted use of the geocoded data. We are grateful to the OpenStreetMap and Nominatim communities for providing the powerful open-source tools that make this possible.