Virtual Threads in Java: A Gateway to Cost-Effective Cloud Solutions

As cloud computing continues to grow, companies are constantly seeking ways to optimize their applications to handle more users, perform faster, and consume fewer resources. Traditional methods of scaling, such as adding more hardware or optimising existing code for better performance, often come with significant costs and complexity. In Java 21 we have a new way to manage thousands, or even millions, of threads with minimal overhead, thus providing a scalable and cost-effective solution for modern applications.

One of the most recent innovations in the Java platform, Virtual Threads, developed under Project Loom and finalised in Java 21, promises to revolutionise how we handle concurrency. In this article, we will explore how Virtual Threads can help lower operational costs in cloud environments.

We will go through:

- Concurrency vs. Parallelism

- Threads in Java

- The Problem with Traditional Threads

- Scaling Solutions and Their Costs

- Blocking I/O

- Non-Blocking I/O

- The Promise of Virtual Threads

- Virtual Threads and Cost Reduction in Cloud Environments

Concurrency vs. Parallelism

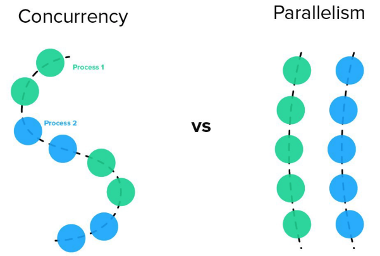

Before delving into the specifics of Virtual Threads, it’s important to understand the fundamental concepts of concurrency and parallelism, as they play a crucial role in application responsiveness and performance.

What is concurrency?

Concurrency refers to the ability of an application to make progress on more than one task during the same period of time. This doesn’t necessarily mean that these tasks are being executed at the exact same moment. Instead, concurrency involves interleaving multiple tasks so that each can progress without waiting for the others to finish completely. This approach improves the application’s responsiveness, as it can quickly switch between tasks, providing a smoother experience to the user.

What is Parallelism?

Parallelism, on the other hand, is a subset of concurrency where tasks are executed simultaneously, typically on different CPU cores. This means that multiple tasks are literally running at the same time. Parallelism is particularly beneficial for performance, as it allows for the full utilization of multi-core processors, enabling applications to perform complex computations faster. While concurrency improves responsiveness by managing multiple tasks effectively, parallelism boosts performance by leveraging simultaneous execution.

Threads in Java

Java, as a language, has been with us for over 25 years. From the very early days, it provided a straightforward abstraction for creating threads. Threads are fundamental to concurrent programming, allowing a Java program to perform multiple tasks or operations concurrently, making efficient use of available resources, such as CPU cores. Using threads, we can increase the responsiveness and performance of our applications. Creating a Java thread is easy and is part of the language itself.

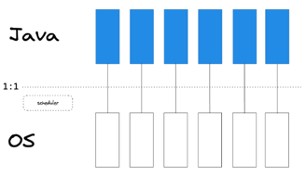

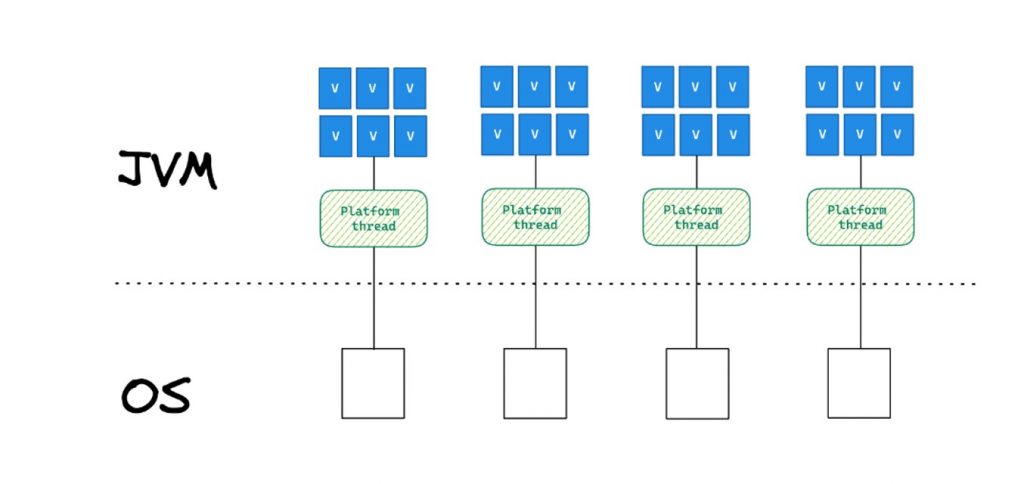
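
As a minimal sketch of the two classic ways to create a thread with the built-in `java.lang.Thread` class, consider the following. The class name `ThreadBasics`, the thread names, and the messages are made up for illustration.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

class ThreadBasics {

    // Starts two platform threads, waits for both, and returns the messages
    // they recorded. The order of the messages is not guaranteed.
    static List<String> runTwoThreads() {
        List<String> messages = new CopyOnWriteArrayList<>();

        // Option 1: pass a Runnable (here a lambda) to the Thread constructor.
        Thread first = new Thread(
                () -> messages.add("hello from " + Thread.currentThread().getName()),
                "worker-1");

        // Option 2: subclass Thread and override run().
        Thread second = new Thread("worker-2") {
            @Override
            public void run() {
                messages.add("hello from " + getName());
            }
        };

        first.start();  // start() launches a new thread that calls run()
        second.start();
        try {
            first.join();  // wait for both threads before reading the results
            second.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return messages;
    }

    public static void main(String[] args) {
        runTwoThreads().forEach(System.out::println);
    }
}
```

Calling `start()` rather than `run()` is what makes the work happen on a separate thread; `join()` makes the caller wait until that thread has finished.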

Java uses a virtual machine, commonly called the JVM (Java Virtual Machine), which provides a runtime environment for Java code. The JVM is a multithreaded environment: several threads can execute code at the same time, and each Java thread is an abstraction over an OS (operating system) thread. Many applications written for the JVM are concurrent programs, such as servers and databases, that serve many requests concurrently and compete for computational resources.

The Problem with Traditional Threads

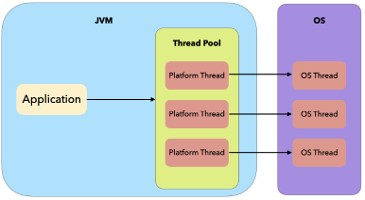

Before Project Loom, each Java thread was directly tied to an OS thread. Such a thread is now called a Platform Thread. This one-to-one relationship meant that an operating system thread only became available for other tasks when the corresponding Java thread completed its execution. Until that point, the operating system thread remained occupied, unable to perform any other activities.

The issue with this approach is that it is expensive from many perspectives. For every Java thread created, the operating system needs to allocate resources, such as memory, and initialise the execution context. These resources are limited and should be used cautiously. The Thread pool pattern is what helps us at this point. With it, we have a set of threads previously initialised and ready for use. There is no extra time spent on starting up a new thread because it’s already up, improving performance.
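
The thread pool pattern can be sketched with the standard `ExecutorService` API. In this minimal example the class name `ThreadPoolSketch` and the pool and task sizes are arbitrary choices for illustration; the point is that many tasks are served by a small, reused set of threads.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class ThreadPoolSketch {

    // Runs taskCount small tasks on a pool of at most poolSize reusable threads
    // and returns the names of the threads that actually did the work.
    static Set<String> runOnPool(int poolSize, int taskCount) {
        Set<String> workerNames = ConcurrentHashMap.newKeySet();
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            for (int i = 0; i < taskCount; i++) {
                pool.execute(() -> workerNames.add(Thread.currentThread().getName()));
            }
        } finally {
            pool.shutdown(); // stop accepting new tasks; queued tasks still run
        }
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return workerNames;
    }

    public static void main(String[] args) {
        // 100 tasks, but never more than 4 distinct worker threads.
        System.out.println(runOnPool(4, 100));
    }
}
```

However many tasks are submitted, the set of worker names never grows beyond the pool size, because the same threads are handed one task after another.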

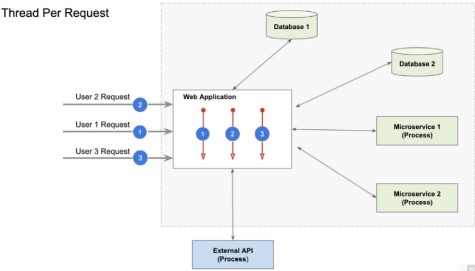

In a typical Java enterprise application following the thread-per-request model, when a user request comes in, the application server (e.g., Tomcat, WebLogic etc.) associates this request with a single Java thread from the pool. The thread handles the request from start to end. However, if a thread performs a blocking operation (e.g., talking to a database or communicating with a service over HTTP), it cannot return to the pool until the processing is complete. This matters because, as already mentioned, there is a maximum number of threads that can be created. Threads are a limited resource, and running out of them leads to performance issues, especially under high load.

The design and architecture of our application will depend on the number of concurrent users that the application is supposed to process. If there are millions of users, we must ensure that our application doesn’t overload its resources, like memory, CPU, database connections, and so on. When too many users are trying to use the application at once, it can reach a point where all available processing threads are in use. When this happens, any new requests have to wait until a thread becomes available. This can cause the application to slow down or even freeze if it’s handling a lot of traffic.
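
The effect of running out of pooled threads can be reproduced in a few lines. This is a rough sketch, not a benchmark: the class name `PoolExhaustionSketch` is hypothetical, and `Thread.sleep` stands in for a blocking database or HTTP call.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class PoolExhaustionSketch {

    // Submits `requests` tasks that each block for blockMillis on a pool of
    // poolSize threads and returns the elapsed wall-clock time in milliseconds.
    static long elapsedMillis(int poolSize, int requests, long blockMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        long start = System.nanoTime();
        for (int i = 0; i < requests; i++) {
            pool.execute(() -> blockFor(blockMillis)); // simulated blocking call
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    private static void blockFor(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        // With 2 threads, 6 blocked requests are served in 3 rounds;
        // with 6 threads they all wait at the same time.
        System.out.println("pool of 2: " + elapsedMillis(2, 6, 100) + " ms");
        System.out.println("pool of 6: " + elapsedMillis(6, 6, 100) + " ms");
    }
}
```

With the small pool, later requests sit in the queue while every thread is asleep, so total time grows with the number of queued requests even though the CPU is doing almost nothing.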

Scaling Solutions and Their Costs

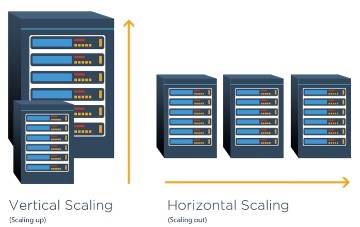

Before the existence of virtual threads, there were two options available for addressing scalability issues in concurrent programming in Java. The first was to add more hardware by scaling vertically or horizontally. The second, covered later in this article, was to change the programming model and adopt non-blocking I/O.

Vertical Scaling: Deploying the application on a more powerful machine, VM, or container. This involves increasing resources like CPU, memory, and disk space. However, this approach has its limits and increases costs, especially in a cloud environment, and it cannot address very high scalability requirements. For example, a single machine, however powerful, will not be enough for an application that has to support 1 million users.

Horizontal Scaling: Increasing the number of application nodes. This approach has no hard upper limit, but it’s costly. It involves adding more nodes as scalability needs increase, which has become a standard practice.

Vertical and horizontal scaling have become easier to implement. With the rise of cloud computing, companies have moved their applications to cloud infrastructure, which also provides the ability to scale horizontally in an automatic way based on parameters such as CPU utilisation. So, businesses don’t have to buy new hardware. They simply rent it for a short period of time.

So, you might think at this point the problem is effectively solved, and it’s true, the problem is solved, but the solution is way too costly. Renting or buying additional, more powerful machines is a costly endeavour, especially when it comes to supporting millions of users at a time. Cloud providers like AWS, Microsoft Azure, and Google Cloud benefit when more VMs are added, as this increases their revenue. Therefore, we must make sure that a single application instance is already optimised for the highest scalability possible before we start scaling vertically and horizontally.

One of the critical areas to consider for optimising an application is how it handles I/O operations. There are two main approaches: Blocking I/O and Non-Blocking I/O.

Blocking I/O

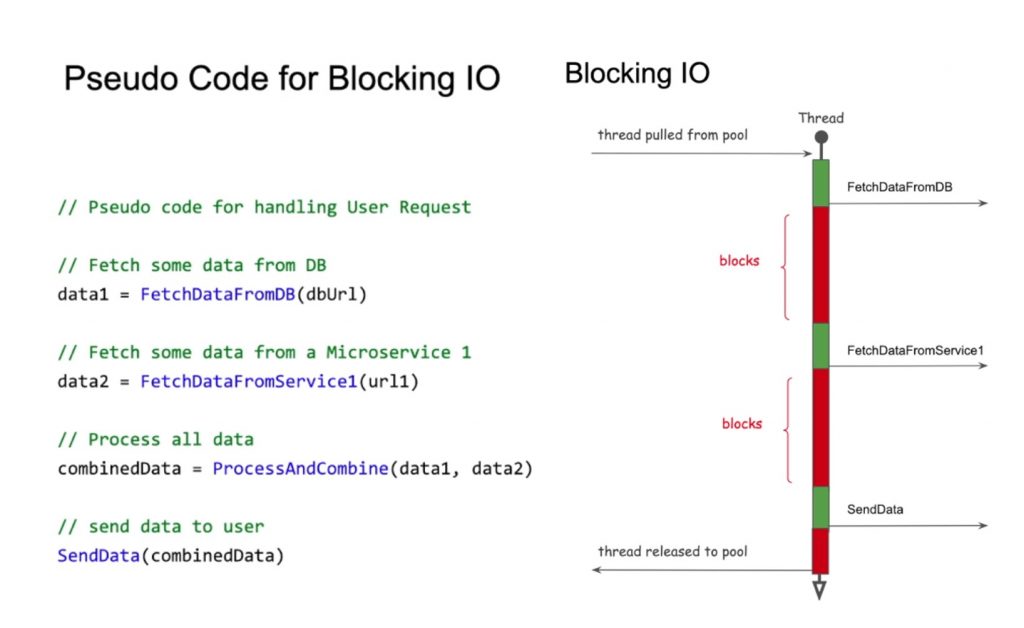

In a blocking I/O model, when a thread performs an I/O operation, it is put to sleep until the operation is completed. This approach is simple and straightforward: the execution goes sequentially and it’s easy to wrap your head around. However, it can lead to inefficient resource usage, especially under high load.

When you look at this diagram, you can see the thread executing from top to bottom, with time progressing downwards. The application server pulls the thread from the pool to execute the user request. During execution, the thread is sometimes scheduled by the CPU for processing and sometimes blocked for various reasons, the most common being I/O operations.

In the diagram, green indicates that the CPU is being utilised by the thread for processing. In contrast, red indicates that the thread is blocked due to I/O operations. For instance, when the thread makes a call to the database to fetch data, it turns red while waiting for the database to respond. This waiting period is considered wasted time for the thread since it cannot perform other tasks during this time.

You can see three blocking calls made by the thread. In other words, this thread, which could be used to handle a new incoming user request, is waiting for a blocked I/O operation. During these periods, the thread is idle, consuming unnecessary resources, as it is unable to perform other tasks while waiting for a response. Depending on the case, this response time can be 1ms, 1s, 10s, or more. The longer the response time, the longer the thread remains idle, blocking resources. This idle time represents a non-optimal use of system resources.

Advantages:

- Simplicity: Easy to write, read, and understand.

- Sequential Logic: Follows a linear flow, which is easier to debug and maintain.

Disadvantages:

- Inefficiency: Threads are idle while waiting for I/O operations to complete, leading to poor resource utilisation.

- Scalability: Limited by the number of threads that can be created and managed by the system.
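
In code, the blocking style looks like ordinary sequential Java. The sketch below uses three hypothetical calls (`findUser`, `findOrders`, `render`) whose waiting is simulated with `Thread.sleep`; in a real application they would be database or HTTP calls.

```java
class BlockingHandlerSketch {

    // Hypothetical blocking calls: each one parks the calling thread while it
    // "waits" for a response, just like a JDBC query or a blocking HTTP client.
    static String findUser(int id) {
        pause(50);
        return "user-" + id;
    }

    static String findOrders(String user) {
        pause(50);
        return user + ":orders";
    }

    static String render(String orders) {
        pause(50);
        return "<" + orders + ">";
    }

    // The request handler reads top to bottom; the thread is idle during each call.
    static String handleRequest(int id) {
        String user = findUser(id);
        String orders = findOrders(user);
        return render(orders);
    }

    private static void pause(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println(handleRequest(7)); // prints <user-7:orders>
    }
}
```

The handler is easy to read and debug, and a stack trace shows exactly where it is waiting, but the thread running it is unavailable for roughly the whole 150 ms.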

Non-Blocking I/O

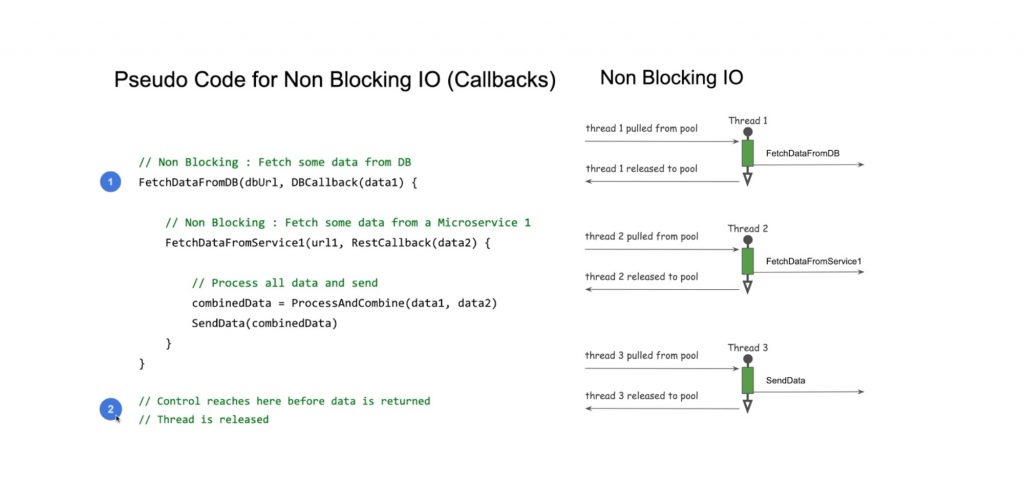

In a non-blocking I/O model, threads can initiate an I/O operation and then continue executing other tasks. This approach allows better utilisation of system resources and can handle a larger number of concurrent I/O operations. The programming paradigm changes rather dramatically. The application should use non-blocking API calls in the code instead of blocking API calls.

However, non-blocking I/O introduces complexity. This programming model is clearly less intuitive than what we, as developers, are used to, which is exactly why many developers have a hard time understanding it. Although the non-blocking coding style does solve the problem of blocking threads, it comes at the cost of very high complexity for programmers. It’s easy to make mistakes and difficult to debug them. If we accidentally make a blocking call within one of the callback handlers, it won’t be easy to detect it.

Advantages:

- Resource Efficiency: Threads are not blocked and can perform other tasks while waiting for I/O operations to complete.

- Scalability: Can handle a larger number of concurrent operations, as it is not limited by the number of threads.

Disadvantages:

- Complexity: More difficult to write and understand due to the asynchronous nature and potential callback hell.

- Error Handling: More complex error handling and state management.
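
One common way to write non-blocking code in plain Java is `CompletableFuture`. The sketch below is only an illustration of the style: the class and method names are hypothetical, and `CompletableFuture.delayedExecutor` is used to simulate a response that arrives 50 ms later without holding a thread while waiting.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.concurrent.TimeUnit;

class NonBlockingHandlerSketch {

    // Simulated I/O: tasks submitted here run after a 50 ms delay, and no
    // thread is blocked during that delay.
    static final Executor SIMULATED_IO =
            CompletableFuture.delayedExecutor(50, TimeUnit.MILLISECONDS);

    // Hypothetical asynchronous calls that return immediately with a future.
    static CompletableFuture<String> findUserAsync(int id) {
        return CompletableFuture.supplyAsync(() -> "user-" + id, SIMULATED_IO);
    }

    static CompletableFuture<String> findOrdersAsync(String user) {
        return CompletableFuture.supplyAsync(() -> user + ":orders", SIMULATED_IO);
    }

    // The handler no longer reads top to bottom: each step is a callback
    // chained onto the previous result, and errors travel through the chain.
    static CompletableFuture<String> handleRequest(int id) {
        return findUserAsync(id)
                .thenCompose(NonBlockingHandlerSketch::findOrdersAsync)
                .thenApply(orders -> "<" + orders + ">")
                .exceptionally(error -> "error: " + error.getMessage());
    }

    public static void main(String[] args) {
        // join() is used here only so the demo can print the final value.
        System.out.println(handleRequest(7).join()); // prints <user-7:orders>
    }
}
```

The logic is the same as in a sequential handler, but control flow, error handling, and debugging all move into the callback chain, which is where the extra complexity comes from.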

The Promise of Virtual Threads

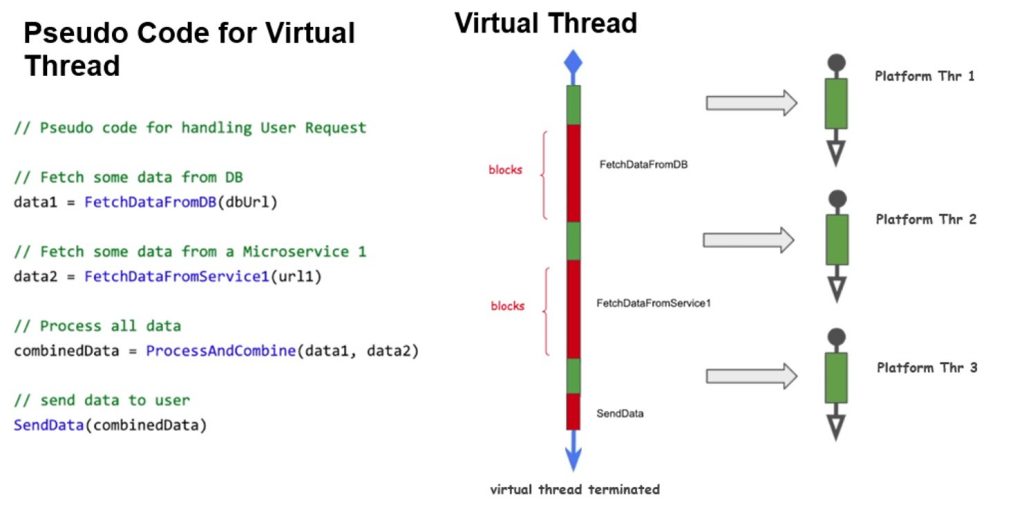

As of Java 21, developers have a new way to solve this problem: an entirely new implementation of the Java thread, called the virtual thread. When a virtual thread blocks, for example on an I/O call, the JVM typically parks it and frees the underlying platform thread to run other virtual threads, which makes blocking cheap. This allows us to write imperative blocking code without significant concerns about scalability. The JVM can give the illusion of plentiful threads by mapping a large number of virtual threads onto a small number of OS threads.
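
To get a feel for this, the following sketch starts ten thousand blocking tasks, each on its own virtual thread, using `Executors.newVirtualThreadPerTaskExecutor()` from Java 21. The class name, the task count, and the sleep that stands in for I/O are illustrative assumptions.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class ManyVirtualThreads {

    // Runs taskCount blocking tasks, one virtual thread per task, and returns
    // how many of them completed. Requires Java 21 or later.
    static int runBlockingTasks(int taskCount, Duration blockFor) {
        AtomicInteger completed = new AtomicInteger();
        // close() at the end of try-with-resources waits for all submitted tasks.
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < taskCount; i++) {
                executor.execute(() -> {
                    try {
                        Thread.sleep(blockFor); // simulated blocking I/O
                        completed.incrementAndGet();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
        }
        return completed.get();
    }

    public static void main(String[] args) {
        int done = runBlockingTasks(10_000, Duration.ofMillis(100));
        System.out.println(done + " tasks completed");
    }
}
```

Running the same number of tasks with one platform thread each would mean creating ten thousand OS threads; here the sleeping tasks only occupy a small set of carrier threads while they are actually running.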

The application code in the thread-per-request style can run in a virtual thread for the entire duration of a request. The virtual thread consumes an OS thread only while performing calculations on the CPU. This results in the same scalability as the asynchronous style but achieved transparently. The code stays familiar to developers, even though the implementation of virtual threads is very different from that of platform threads.

To use virtual threads, developers instruct the JVM to create the Virtual Thread instead of the traditional thread, typically in a single line of code. Virtual Threads extend the functionality of traditional threads and adhere to the same API contracts. This ensures that the rest of the code remains largely unchanged from traditional thread usage. Developers can leverage the benefits of virtual threads while maintaining the familiar syntax and programming patterns they are accustomed to. This seamless integration allows for easier adoption and adaptation of virtual threads in existing codebases, promoting efficiency and scalability without requiring extensive rewrites or adjustments.
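
As a small illustration of how little the code changes, the sketch below runs the same task once on a traditional thread and once on a virtual thread created with `Thread.ofVirtual()`. The class name `VirtualThreadCreation` is made up for this example; Java 21 or later is assumed.

```java
class VirtualThreadCreation {

    // Runs the same kind of task on a platform thread and on a virtual thread.
    // Index 0 holds isVirtual() for the platform thread, index 1 for the virtual one.
    static boolean[] runOnBothKinds() {
        boolean[] ranOnVirtual = new boolean[2];

        // Traditional (platform) thread.
        Thread platform = new Thread(
                () -> ranOnVirtual[0] = Thread.currentThread().isVirtual());
        platform.start();

        // Virtual thread: only the way the thread is created changes.
        // Thread.startVirtualThread(task) is an equivalent shortcut.
        Thread virtual = Thread.ofVirtual().start(
                () -> ranOnVirtual[1] = Thread.currentThread().isVirtual());

        try {
            platform.join(); // join() works the same way for both kinds
            virtual.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return ranOnVirtual;
    }

    public static void main(String[] args) {
        boolean[] result = runOnBothKinds();
        System.out.println("platform thread virtual? " + result[0]); // false
        System.out.println("virtual thread virtual?  " + result[1]); // true
    }
}
```

Both objects are instances of `java.lang.Thread`, which is why existing code that calls `join()`, `interrupt()`, or `Thread.currentThread()` keeps working.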

Virtual Threads and Cost Reduction in Cloud Environments

Virtual Threads offer several benefits that translate into financial savings in cloud environments:

- Reduced Memory Overhead: Virtual Threads consume less memory, allowing more threads to run on the same resources, reducing the number of required instances and lowering costs.

- Processor Efficiency: Better CPU utilisation allows more tasks to be performed per unit of time, reducing the number of instances needed to support the workload.

- Scalability Without Proportional Costs: Virtual Threads make it practical to create millions of lightweight threads, reducing the need to over-provision infrastructure for peak loads.

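- Reduced Latency Under Load: Virtual Threads do not make an individual operation faster, but requests spend less time queued waiting for a free thread. Applications that keep latency low under load require fewer resources to achieve the same performance levels, resulting in operational cost savings.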
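
A back-of-the-envelope calculation shows how these points can translate into instance counts. The sketch applies Little's Law (requests in flight = throughput × latency); every number in it (10,000 requests per second, 0.5 s latency, 200 pooled threads, 5,000 concurrent requests per instance) is a hypothetical input chosen for illustration, not a measurement.

```java
class CapacityEstimate {

    // Little's Law: average requests in flight = throughput * latency.
    static long requiredConcurrency(double requestsPerSecond, double latencySeconds) {
        return (long) Math.ceil(requestsPerSecond * latencySeconds);
    }

    // Number of instances needed if each one can hold at most
    // maxConcurrentPerInstance requests in flight (ceiling division).
    static long instancesNeeded(long concurrency, long maxConcurrentPerInstance) {
        return (concurrency + maxConcurrentPerInstance - 1) / maxConcurrentPerInstance;
    }

    public static void main(String[] args) {
        // Hypothetical workload: 10,000 requests/s, each mostly waiting on I/O for 0.5 s.
        long inFlight = requiredConcurrency(10_000, 0.5);

        // Assumption: a platform-thread pool capped at 200 threads per instance.
        System.out.println("thread pool of 200: " + instancesNeeded(inFlight, 200) + " instances");

        // Assumption: with virtual threads one instance can hold 5,000 waiting requests.
        System.out.println("virtual threads:    " + instancesNeeded(inFlight, 5_000) + " instance(s)");
    }
}
```

Under these assumptions the thread cap alone forces 25 instances, while the virtual-thread version fits in one. In practice CPU, memory, and downstream limits such as database connections still bound how many requests a single instance can hold, so the real saving has to be measured for each workload.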

Takeaways

Virtual Threads represent a significant innovation in the Java platform, providing an efficient solution to concurrency and parallelism challenges. In cloud environments, where resource efficiency directly impacts financial costs, adopting Virtual Threads can lead to substantial reductions in operational expenses. By embracing this new technology, developers and architects can create more scalable and cost-effective applications.

Furthermore, Virtual Threads simplify the development process by allowing developers to write straightforward, sequential code without sacrificing performance. This reduces the complexity associated with managing traditional threads, leading to cleaner, more maintainable codebases. Most importantly, by maximising resource utilisation, Virtual Threads can significantly reduce the number of instances required to handle the same workload. This optimization can result in considerable cost savings on cloud infrastructure, making it an essential strategy for businesses looking to lower their operational costs while maintaining high performance and scalability.